Written by Rosalie van Dijk

~ Trigger Warning: sexual trauma and suicide

Are modern chatbots suitable for sensitive situations in which people need a high level of emotional support and understanding?

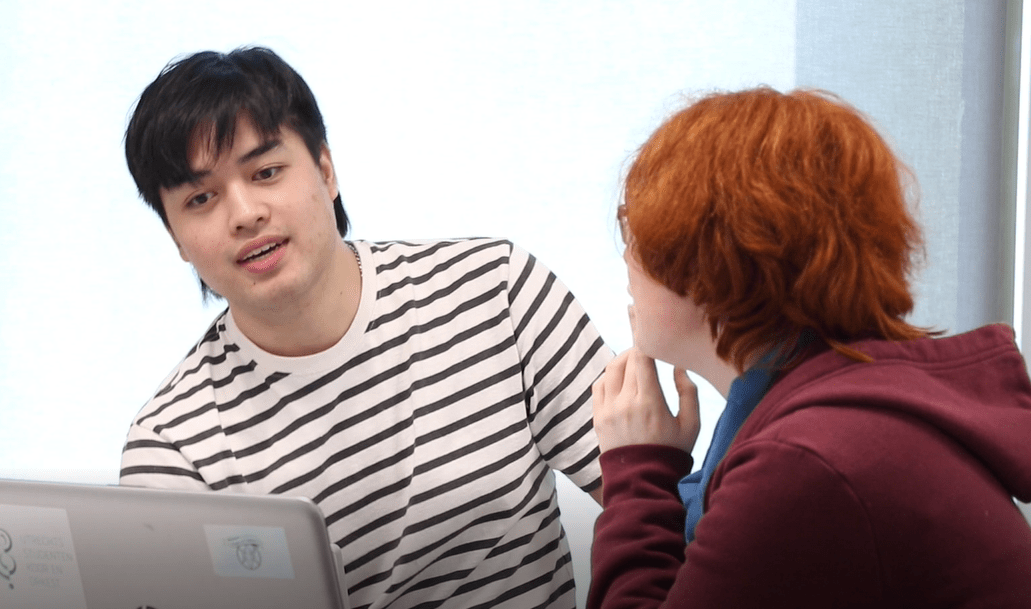

Chatbots were originally developed for customer support, but their 24/7 availability and accessibility also make them interesting for non-commercial use. Shakila Shayan, a researcher at HEMD (Human Experience & Media Design), works with UMC Utrecht and Centrum Seksueel Geweld on a project that uses chatbots to help victims of sexual assault. Sarah Mbawa, a PhD researcher at HEMD, is also looking into chatbots, but in the context of suicide prevention. In this article, we look at both of their research projects.

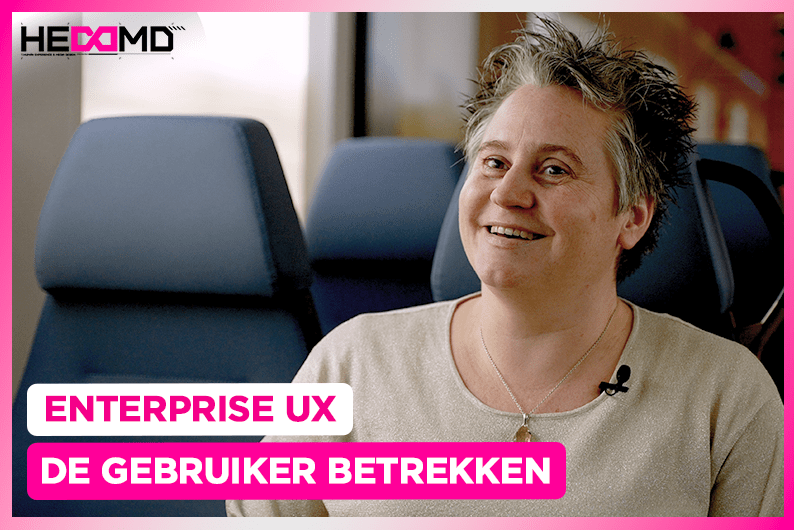

Shakila Shayan (left) and Sarah Mbawa (right)

Despite more than 100,000 reports of sexual assault a year in the Netherlands, many victims don’t report to the police or seek treatment because of shame, guilt or fear of judgement. Victims avoid talking about their trauma, while their daily lives are disrupted by flashbacks, fear and sleeping problems. If left untreated, the experience of sexual assault can lead to severe mental health problems, such as PTSD.

Talking about a traumatic experience can feel like opening Pandora’s box. When reaching out for help, many victims struggle to put their experience into words, yet this is an important step in their search for help. If victims can put their feelings into a narrative, they may eventually be able to control these intense emotions and become more likely to seek professional help.

Shakila’s research focuses on developing a chatbot that supports people who have experienced sexual assault in the process of self-disclosure and in their search for professional help. The goal is to remove the barriers victims face when disclosing, such as stigmatization, fear of judgement, self-blame and reliving the traumatic experience.

Modern chatbots fall short

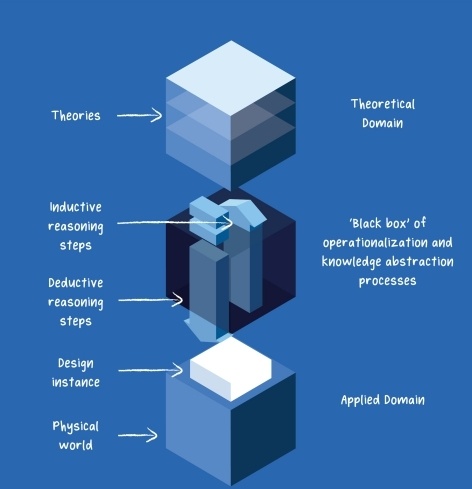

Chatbots, especially conversational agents (CAs), can provide a degree of non-judgemental support to start the dialogue, but they often lack the human understanding that trauma-related conversations require. Chatbots have trouble interpreting facial expressions, body language and tone of voice, and they are not good at crisis management. If the conversation reveals safety concerns, or if the user becomes distressed as a result of it, there is very little a chatbot can do.

Professional healthcare providers, however, can act in these situations. They can monitor their clients’ stress levels, observe their body language and use stress-relieving techniques to calm their clients and help them stay in control of their emotions. Chatbots currently on the market, especially advanced AI-based chatbots, lack this kind of regulation. They start the conversation by inviting the user to talk openly about their trauma, which can get out of hand and may harm the user.

Chatbot as facilitator

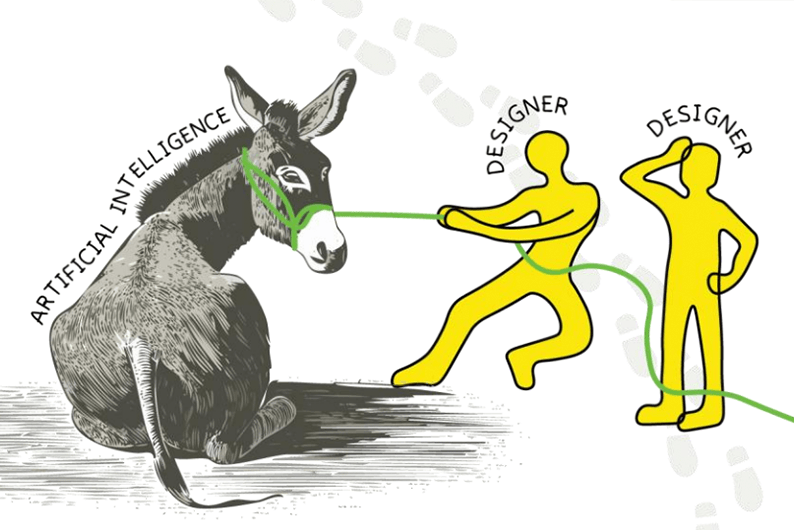

Shakila wants to use her research to design a chatbot that replicates the regulation practices of therapists, helping individuals unravel their experience step by step and, crucially, only when they are ready to do so. The only way this can work is through a strictly regulated, carefully pre-designed dialogue.

Together with conversation designers and digital storytelling companies, Shakila’s team aims to develop a chatbot prototype that helps individuals navigate their own experience through the stories of other people. The idea is that these stories can be relatable for victims and help them put their experience into words. This indirect approach lowers the threshold for victims to speak up themselves. Therapists see this method as more accessible than direct questioning, because it reduces the pressure to explicitly describe what happened.

Co-creation: The next step

The next step is to test the prototype with clients to assess whether the system is effective and desirable. Ultimately, the team hopes to develop a full-fledged system that supports self-disclosure and could be featured on the website of Centrum Seksueel Geweld. Collaboration between users and professionals is essential and will be at the core of this research: mental healthcare revolves around the practices of therapists, which cannot simply be translated into technology. Every user brings new challenges that call for a personalized approach.

Chatbots in suicide prevention

Sarah Mbawa, also a researcher at HEMD, focuses on chatbots in suicide prevention. Talking about suicidal thoughts with a bot is a sensitive matter, because the user may be in a very vulnerable state. A chatbot can’t assess the gravity of the situation: does this person need acute help? And if they do, a chatbot may not be able to call 113, the Dutch suicide prevention helpline, or the police directly.

That is why Sarah’s research explores how this technology could be used in suicide prevention and mental healthcare, particularly how chatbots could help monitor suicidal thoughts and facilitate connections with mental health professionals in order to prevent a crisis. Her research examines what roles chatbots could play in assisting help seekers and mental healthcare professionals, and how designers can take a user-centered, multidisciplinary and collaborative approach in this context.

Guidelines for digital interventions:

- Don’t give therapeutic advice; instead, offer support in finding professional help.

- Don’t create dependency by presenting a chatbot as a replacement for therapy.

- Offer personalized information without overstepping the limits of digital support.

- Work together with users and professionals.