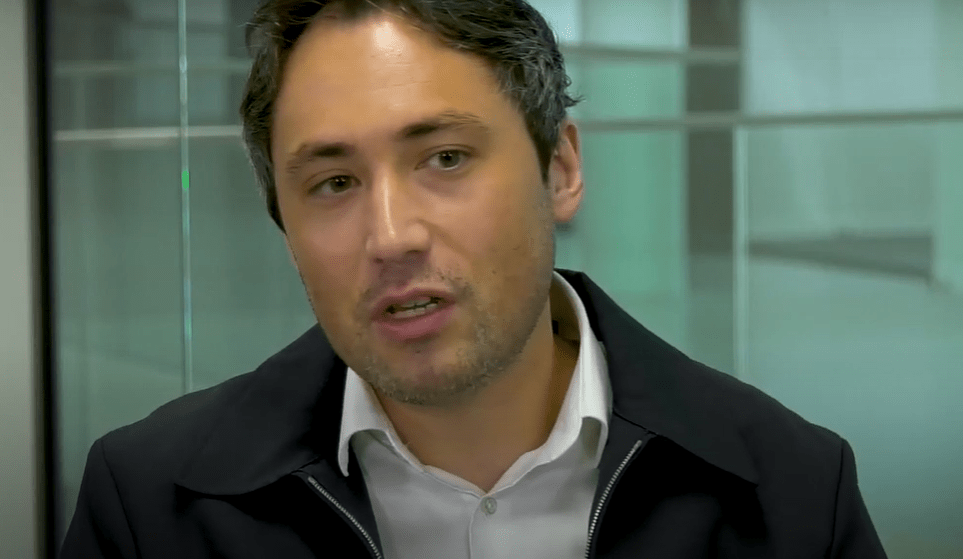

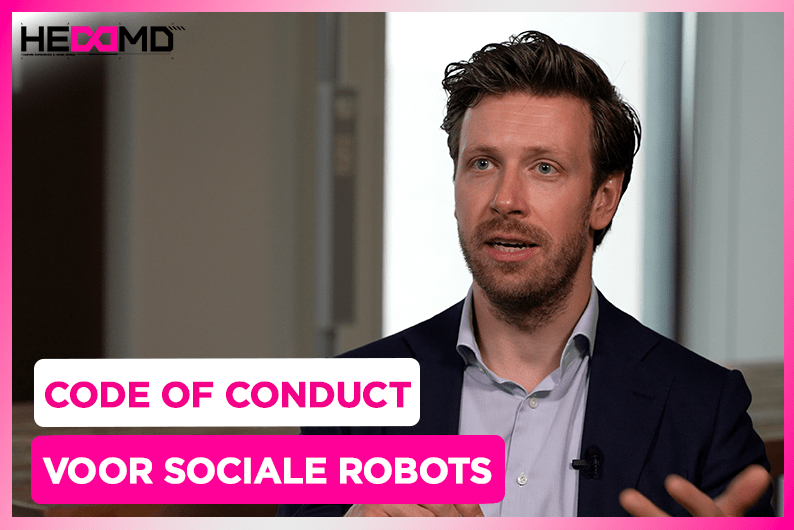

There is no easy solution for data bias, and no single definition of it, says Dennis Nguyen, chair of the mission on data bias. ‘My mission was interested in how data is used to make decisions, and where this can go wrong: for example, when the data is not representative of the users it is meant to serve. Take the facial recognition systems we use to unlock our phones. They work very well for most people, but perhaps not for people with large noses or a different skin colour. This big problem is called data bias.’

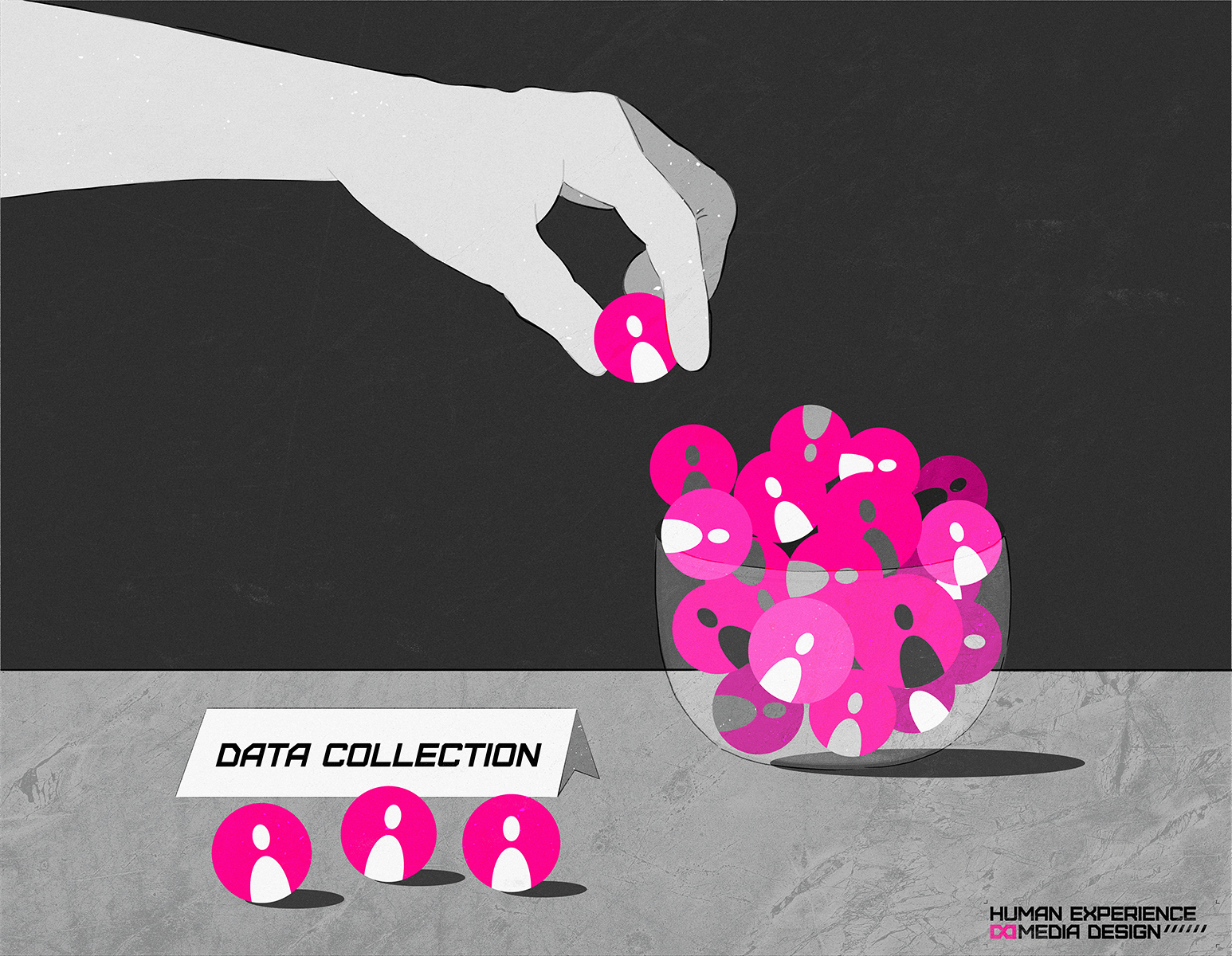

‘I think more and more of these data-collecting systems are emerging in health care, security and elsewhere,’ says the mission chair. ‘One way or another, each of them was designed with a purpose, and we need to start asking questions at this stage, about that purpose and its impact. We look at it from the design perspective: who are the people making the decisions, and how is data collected for these systems? We really try to keep the focus on how these systems are constructed, built and designed.’

Nguyen and his team talked with various experts who build these systems. ‘We also talked with researchers who study the impact of technology on people, especially people of colour and people with disabilities. What can go wrong if minorities, in the health sector for example, are not represented in the data?’

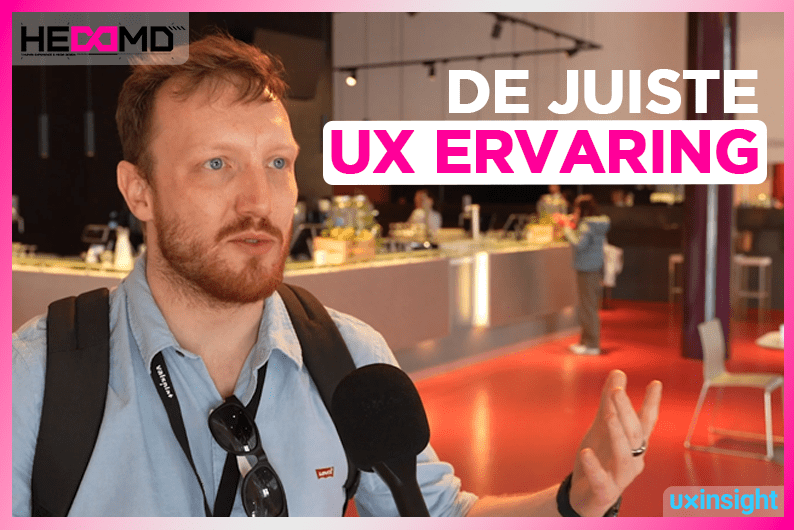

Nguyen discusses these questions, and what the mission brought to light, in the video below:
