How Can We Build Inclusive Digital Technology?

Imagine trying to unlock your phone with your fingerprint, but it just won’t scan it because your thumb is too small. Or you keep repeating the same phrase over and over again, but your mobile phone’s voice assistant still doesn’t “understand” what you’re trying to say.

Some of us are familiar with these kinds of glitches that degrade our user experience. Mostly, we do not think much of them: we either adjust or simply replace the product. However, sometimes these annoyances happen not because the technology is "broken" or "poorly designed". On the contrary, the device or digital service may very well have been designed that way. The problem, then, is that you are the "error": you simply do not match the "regular user" its creators had in mind. The device in front of you behaves differently towards you than towards the general public.

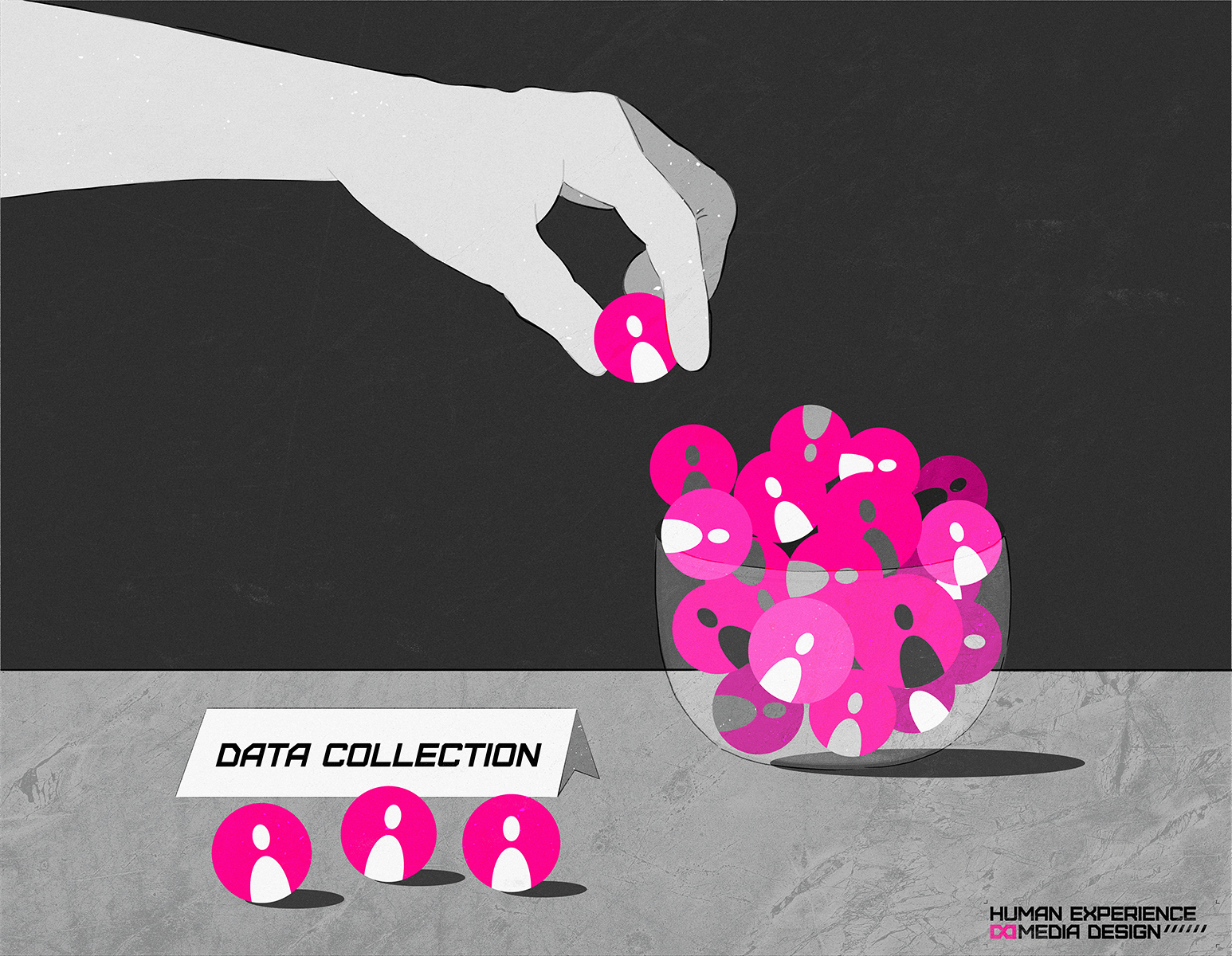

In this case you may experience a (mild) form of data bias: data-driven systems that are not accessible for everybody and exclude or even discriminate against users who do not fit the “standard”. In the above examples, the stakes are relatively small. Still, the same problem can negatively impact people in other contexts. There are plenty of examples: voice recognition systems that cannot “understand” minority accentsfacial recognition solutions that are blind for people of colour, algorithms that are biased against women, or digital designs that are insensitive for the needs of elderly people. Data bias occurs in various sectors and situations. It either excludes certain types of people –or categorises them in an unfavourable way. For example, when people with a migration background are considered as less trustworthy when it comes to paying taxes or credit worthiness.

(Photo by ryoji-iwata)

As a researcher, I am interested in the social and cultural impact of data and technology on people, i.e., in how they shape our relationships and how we look at each other. I think that data bias is an urgent (and fascinating) problem that professionals, regulators, and the public should pay attention to.

I have always been interested in how society defines its "standards" and how the systems we build react to "misfits". This is to some extent influenced by my own personal experience: growing up in a mixed-race family (Asian father, European mother) in 1990s Germany frequently put me in situations where I simply did not fit in. Over the years, I developed a sensitivity to categorisations, stereotypes, deceptive assumptions, and thus biases. This inevitably shapes my views, approaches, and professional interests. And we see more and more situations in which these challenges become concrete, with digital technology taking centre stage in the relationships we build.

If you speak with your native accent and your voice assistant does not understand you, you have two choices: change the way you speak or stop using the technology. If a facial recognition app cannot "read your face", it is simply useless to you. These issues are annoying, and a user becomes a non-user very quickly. In the worst cases, however, biased digital technology can appear explicitly racist (as happened with Google's image recognition algorithm), sexist (for example, Amazon's data-driven recruitment tool), ableist (for example, when data-driven tech is not accessible to disabled people), or ageist (for example, in recruitment or in the usability of tech). These are real and hurtful experiences for the individuals on the receiving end.

Data bias also affects user experience. Designers of user-centric, data-driven technologies are not always aware of the negative impacts their products have on people, or they downplay and ignore them. The stakes are high, however: a company may cause real harm to people, which is bad enough in itself. But it must also consider reputational damage, loss of public support, and even potential legal penalties. None of that is desirable for designers. Understanding data bias, its complexity and variety, and how to develop counterstrategies can help avoid damage to all sides involved.

Problems of data bias emerge when creators of technology put a little too much trust in their assumptions about who represents most users and fail to consider people who may sit outside that mainstream, or, even worse, when they actively decide to exclude them. Data biases result from conscious or unconscious choices among creators of technology. However, economic imbalances and unequal access to technology are additional factors to consider. For example, communities in some regions have sensors that collect data on air quality; if the quality drops, they can intervene. Other communities do not have the means to monitor their environment and thus cannot protect it as effectively.

Data bias can affect the individual user (or customer or citizen) but also larger parts of society. It can be a result of ignorance, naivety, or plain malevolence. Some forms of data bias are caused by personal choices of tech creators, while many are the outcome of complex systemic issues in how society at large deals with inclusion and exclusion. More often than not, hard business goals play an important role, too.

Understanding Data Bias

In my mission, I will explore different forms of data bias and their causes. To do so, I will investigate and compare how different private and public organisations deal with the issue. This is a necessary first step before we can develop remedies. I will focus especially on the user experience (UX) of digital designs in different sectors. Guiding questions are:

- How do design choices (involuntarily) contribute to data bias?

- How do research practices such as “personas” in user experience design relate to bias?

- How do UX designers define "standards" when designing for user groups?

I am less concerned with finding technical, algorithmic solutions to the challenges of data bias (and discrimination). Instead, I want to focus on the social and cultural factors:

- How do designers make and justify their choices?

- How transparent and exhaustive is their preliminary research on target audiences?

- What sensibilities are missing, and when do economic or political factors (or both) outweigh ethical considerations?

I try to achieve this by talking to designers, organisations, and users, and by critically analysing processes that can lead to biases. I am supported in this effort by my colleague Levien Nordeman, a passionate educator and critical thinker with whom I share a keen interest in how data is changing society.

Finding Solutions

I am curious about the ways in which people look at and think about the digital transformation. I am equally interested in how technologies and the organisations behind them gaze at people as users. Through understanding the different sides, we can identify problems as well as misunderstandings and start looking for solutions.

Professionals in the field are neither ignorant of nor insensitive to the risks of digital technologies. More and more data-driven organisations and their designers are exploring how to put ideas such as "privacy by design" into practice, and are actively seeking ways to increase transparency and discuss data ownership. This is a starting point for raising users to the position of partners in a dialogue about data: what data represent and what can be done with them. There is growing awareness that data are not just an objective "thing", and that it matters how the people who collect data also make sense of them.

However, researchers and practitioners also need to explore which strategies help avoid biases and how to include a broad spectrum of different people. We want digital technology to be able to distinguish between us; we want it to recognise who we are and offer the same quality of service to everybody. What we don't want are racist, sexist, ableist, ageist, and otherwise exclusionary designs. We don't want "blind" algorithmic solutions running user-centric devices. But it all starts even before the first line of code is written: at the conceptualisation and ideation stages of any project. We need to understand and challenge the underlying assumptions of individual practitioners and their organisational cultures.

As users, we rely on designers who critically reflect on data bias and ideally embrace ideals of inclusion by design. This mission tries to make a humble contribution to support them in this effort.

The productions for this mission are supported by editor Aaron Golub.
