A Conversation with Dr. Cynthia Bennett

Data-driven technologies are often hailed as efficient solutions that can improve the quality of life for many different people. That is the big promise of technology after all: to enable, to empower, to liberate, and to create “value”. Taken at face value, a great diversity of people do seem to benefit from technological advances in datafication, automation, and robotics, including demographic minorities. For example, there is a growing number of “assistive technologies” that address various disabilities, such as prosthetics or smart wearables for people with hearing impairments. Novel tech innovations of a similar nature pop up almost daily.

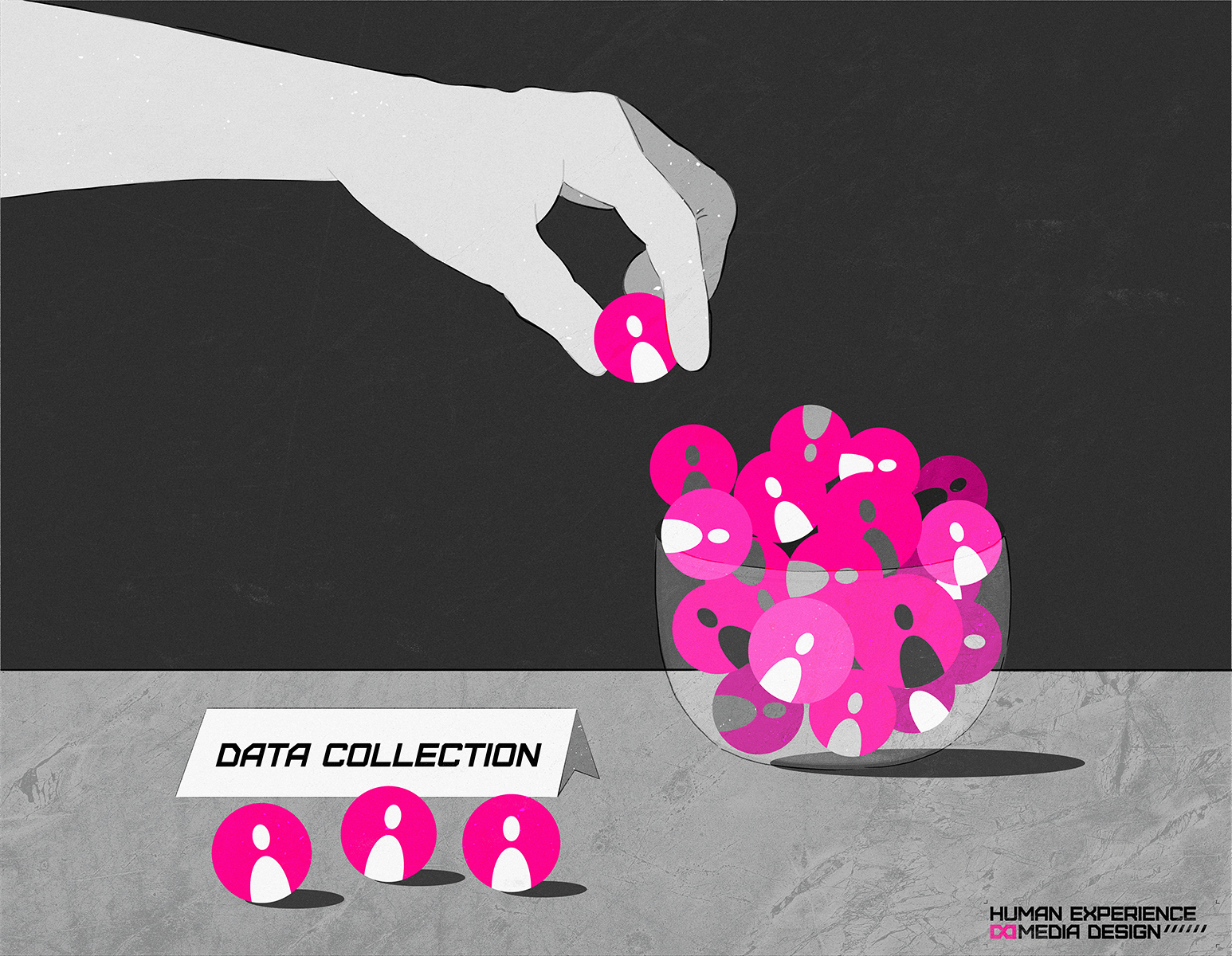

However, while technology can make a tangible positive difference in some of these contexts, it can also put certain groups of people at a disadvantage. Not all technology is designed to be inclusive, and at worst it can have considerably negative effects on people’s lives. Current data-driven technologies can create new inequalities or exacerbate existing ones.

Harmful effects of tech

We talked about the harmful effects of technology and data bias with Dr. Cynthia Bennett, a postdoctoral researcher at Carnegie Mellon University in the USA. Dr. Bennett dedicates her work to finding answers to difficult questions about inclusion, equality, and expanding access to the benefits of technological design, with a particular focus on disabled people. She makes a strong argument for why we need to think more critically about the impact of technology and about our own biases. Only then can we find ways to innovate without causing harm and broaden access to the benefits of technology.

Listen to the podcast here or read the transcript below:

Dennis Nguyen (DN): Today we have with us a researcher from Carnegie Mellon University, Cynthia Bennett, who works a lot on digital inclusion and digital technology. We invited Cynthia to talk a bit about data bias, which is a very important issue in professional and academic discourse. It is also a somewhat confusing issue that has made waves in the news media through incidents in which people experienced discrimination. We are excited to have you here, Cynthia! Before we dive into the topic, maybe you can tell us a little bit more about yourself and your work.

Cynthia Bennett (CB): Thanks for having me! So, I am a postdoctoral researcher at Carnegie Mellon University’s Human-Computer Interaction Institute, and my research has two threads right now. The first is that I am interested in increasing the participation of people with disabilities in computer science and the tech industry. The way that plays out in my research is that I look at the processes and cultures embedded in technology research and development and show how they are usually not very accessible. We tend to expect that people with disabilities are only end-users of our technology, not the designers, developers, and researchers. So, that’s one part of my research programme: understanding these cultures better and changing the processes we use to make them more accessible. The second part is a newer, growing area, and it is around AI bias, specifically how it impacts people with disabilities.

DN: When you hear the term “data bias”, what does it make you think of? And what does data bias mean in connection with your work?

CB: To me, data bias refers to how the data we use, in this case to train machine learning models, represents and reflects the people who are impacted by the decisions made based on that data and by the algorithms that use it. One way this shows up in my research is around image descriptions. Blind people often cannot see images, so we rely (I am a blind person as well) on textual descriptions of images to be included in that form of media. Machine learning has been proposed as a potential solution. What if machine learning models could automatically generate descriptions of images all over the Internet? That would solve a huge problem, because most people do not write descriptions of their images. Please write descriptions of your images, because your human description is way better than, you know, an automatic machine learning description that knows nothing about the context or social factors, etc. I noticed different companies and researchers starting to release products that generate these automatic image descriptions. I also started learning about research on gender and racial bias in AI, as well as a growing awareness that we don’t know much about disability bias in AI. It’s a growing and under-researched field. So, if we think about image descriptions: sometimes information about the people in the image might be really important for understanding the image. Sometimes it might concern how they present their gender, their race, or their disabilities. As I mentioned, I was seeing research on automating image descriptions and I was reading research on racial, gender, and disability bias, but I wasn’t seeing these conversations come together. In a study I did last year, I interviewed blind people, so people who benefit from image descriptions, but who are also a minority gender or race. So, I interviewed people who identified as non-binary or transgender and who identify as black or indigenous or a person of colour.
We talked about their experiences of being represented and misrepresented. They described experiences in which people tried to assume aspects of their identity, and how it affected them when those assumptions were wrong. And we talked about their preferences for how image descriptions should talk about race, gender, and disability. There are a lot of different contextual factors that matter: whether that information is relevant, whether the person or the machine generating the descriptions knows any confirmable details about how the person identifies... there are all these factors that matter, and the participants had a lot of concerns. One of the main concerns, and gender is a really good example, was that a lot of the models used to identify gender are trained on data that has only two genders. So, the data only labels images as male or female. When you train a model on that data, the model has a bias, and that bias is that there are only two genders. When you then use a model that only knows two genders to generate image descriptions, that can really hurt people if they are misgendered. If that happened only once, it might be a micro-aggression, but these participants asked: “What if this is happening in all my photos? I have hundreds of photos!” Or: what if I am looking at a photo of somebody and I get the wrong information about their gender, or about other aspects that the machine learning algorithm gets wrong? So, when I think of data bias, I think about how data represents the people it impacts. In this case, data that only includes two genders has an impact on people! And it may impact people in different ways, for example if you are a minority gender, like non-binary people, who have a long history of being discriminated against.
To me, impact concerns not only the users of the system, like blind people reading the image descriptions, but everyone who is affected by it, such as the people who are in the images. Maybe they are not blind, but they are still affected by that data bias, because they are being subjected to decisions based on that data.

DN: There is the harmful effect you already described: people may at first perceive these incidents as micro-aggressions, and then feel that they add up to something bigger that is harmful to them. Are there other examples where you see data bias putting people at risk?

CB: As I mentioned in the previous example, being misrepresented can cause very personal harm. But it also has very material consequences. If you are not represented when decisions are made, you might be completely left out of those decisions! And when you are left out, you can be cast as “not doing the right thing” or even as doing “the wrong thing”. Some examples that have been particularly relevant in the USA are hiring tools and test proctoring software. These are tools that companies and institutions use to automate decisions: Is this job candidate qualified? Are they exhibiting qualities that we want at our company? Similarly, test proctoring software evaluates whether somebody is behaving ethically, in other words: “Are they cheating on this test?” The Center for Democracy and Technology has written a report on exactly these issues, and what we noticed is that disability is not even considered as a potential experience that someone might be having. So, some behaviours, like not making eye contact, could get a candidate graded lower; I, for example, can’t make eye contact because I am blind. Or if I am taking a test and my eyes are not on the camera, people may think my eyes are somewhere they shouldn’t be. That can happen if you are misrepresented or left out of data, and it can have very real consequences, as we have seen in employment, education, housing, and healthcare.

DN: Data bias is a very complex problem which has very real effects on people. It can make a negative difference in their lives. It makes me wonder: where does it come from? What are the causes?

CB: I often attribute data bias to history and culture. We might think: “Oh, if the data is biased, let’s collect more data and it will not be biased!” In some cases, maybe that helps, but in many cases it doesn’t matter how representative your data is. Whenever it is used, it is probably going to replicate history and culture unless there is very intentional mediation of how that data is used and how the algorithm works. I have learned a lot from historians of science and race. Simone Browne has a book called “Dark Matters” in which she traces the surveillance of black people in the USA back to slavery. So, if we have this history, where it is deeply embedded in our culture that it is okay to surveil and scrutinise people based on their race, in this case black people specifically, that becomes built into our systems. It is a kind of incarnation, a next step in our history and culture. We see this happening in the USA: any time you have a system deciding where police should spend their time, it is based on historical data. We have already identified that areas where black and brown people live in the USA are over-policed. So, if your data is filled with everywhere the police were in the past, that has nothing to do with whether it was right for them to be there, or whether they had any good reason to be there. It’s just the fact that they were there, and that’s the data the system is built on. Addressing these problems really requires addressing our culture, reckoning with our history, and creating new histories that build a different world. It is not just about collecting more data and writing different algorithms. Data bias in AI is just a new iteration of classifying people and making decisions based on how we classify them and how we value them. That’s been happening non-technically forever.

DN: How can people protect themselves? Is that even possible?

CB: I don’t think I have any super low-level advice, but just to reiterate: I agree with raising awareness, and the public should have better knowledge of the consequences of their use of technology. But the solutions have to come from institutions. As individuals, we can be more aware, but we have limited power on our own. We have to join forces and make institutions use their collective power. I just want to be clear: the responsibility is not individual.

DN: What should tech creators consider before they put something out into the public?

CB: One thing that could help, through technical innovation but also through policy, is making it possible for certain technologies and features to be used by the people who need them, while preventing them from being used by people who could exploit them. I have a concrete example: I’ve just done a research project on the impact of delivery robots on accessible public space. There have been some cases where people with disabilities were walking or using their wheelchair, an autonomous delivery robot was in their pathway, and they couldn’t get past. Sometimes this can be very dangerous. One of the things we talk about in this paper is how open data about accessible pathways (e.g., OpenStreetMap in the US) is considered really empowering for people with disabilities, because it helps them plan their routes. But the autonomous robots: how do you think they develop their testing routes? These robots rely on curb cuts; they rely on ramps. The same technology that provides access to people with disabilities is now a tool for moving these robots through public space, and that is becoming an access hazard. So, that is an example I’m currently thinking about. Okay, we want to collect data about spaces that are accessible. Now let’s think about who needs this data and who doesn’t. Some of the solutions will come from regulation and from technical capabilities that limit who has access to what, and that regulate acceptable and unacceptable use.

The productions for this mission are supported by editor Aaron Golub.
